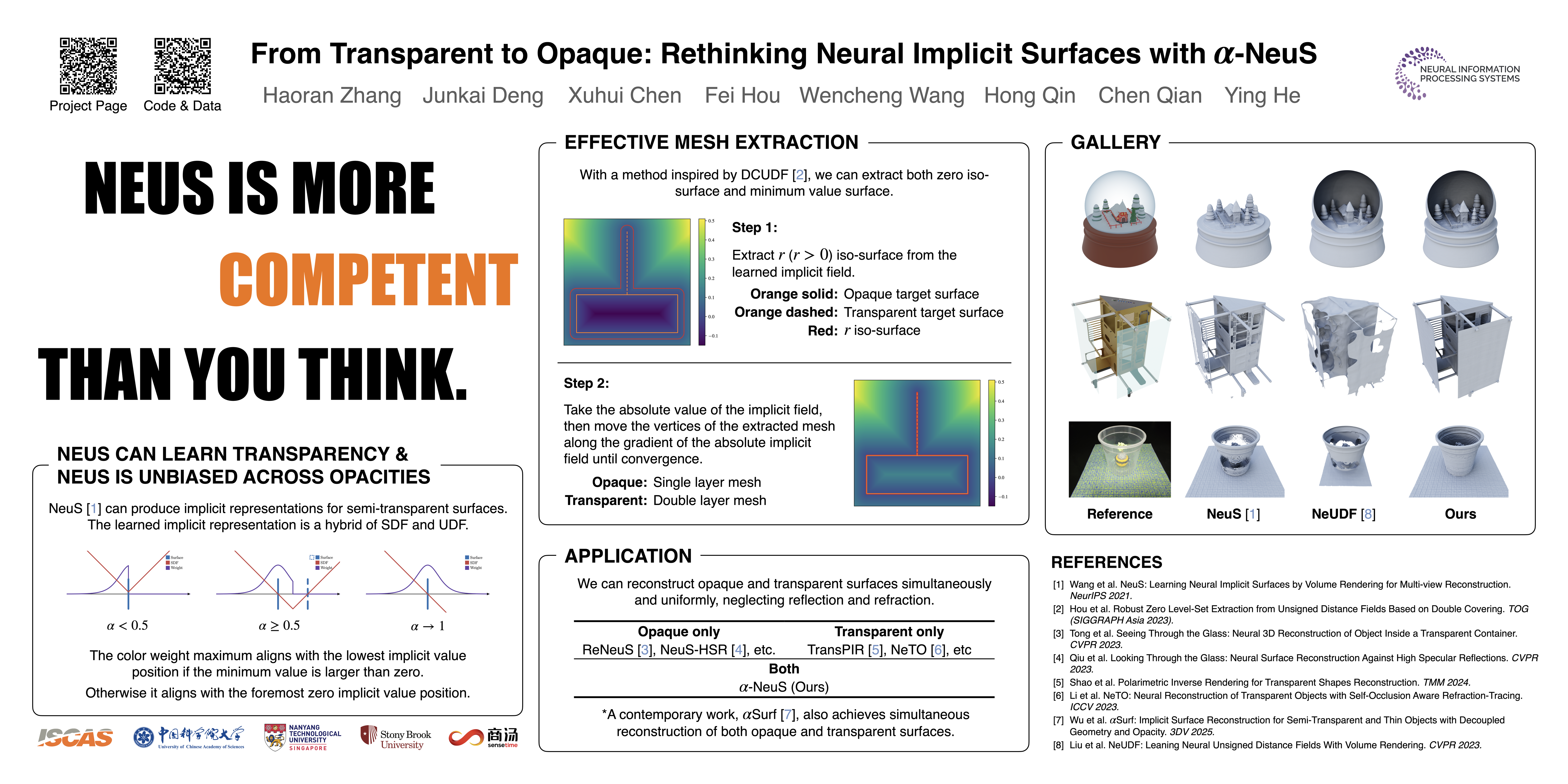

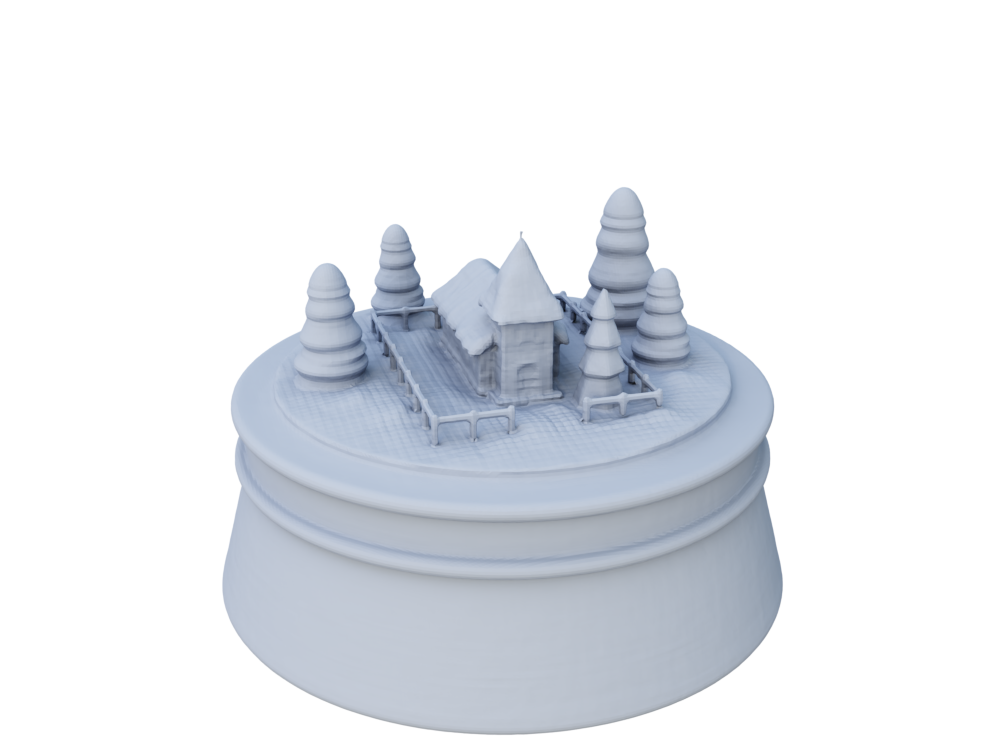

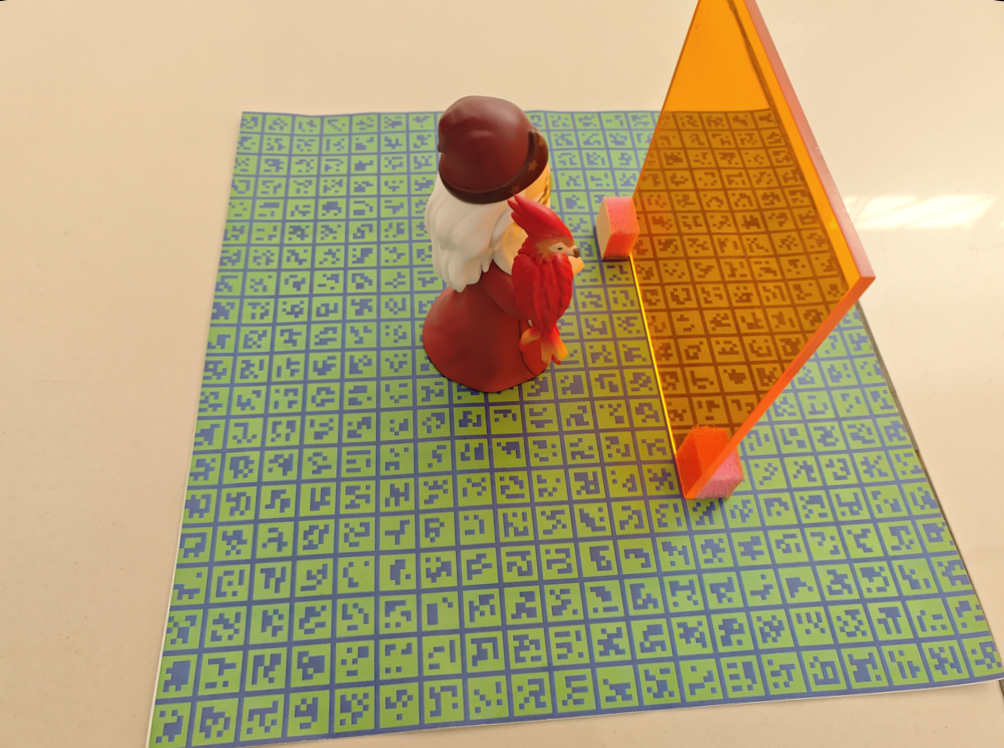

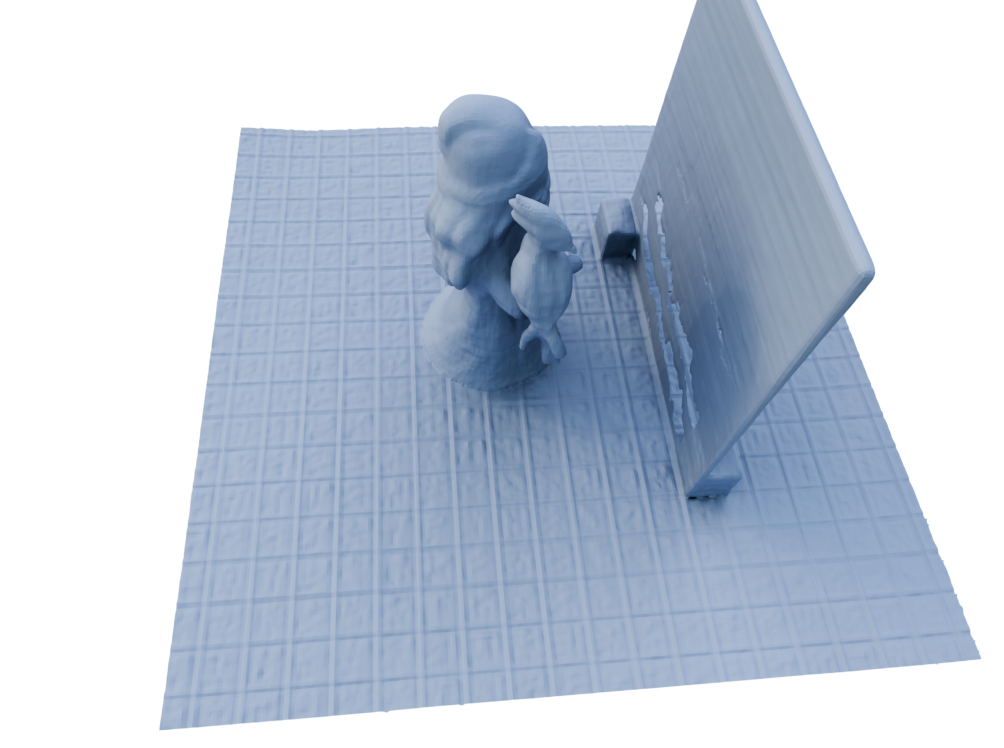

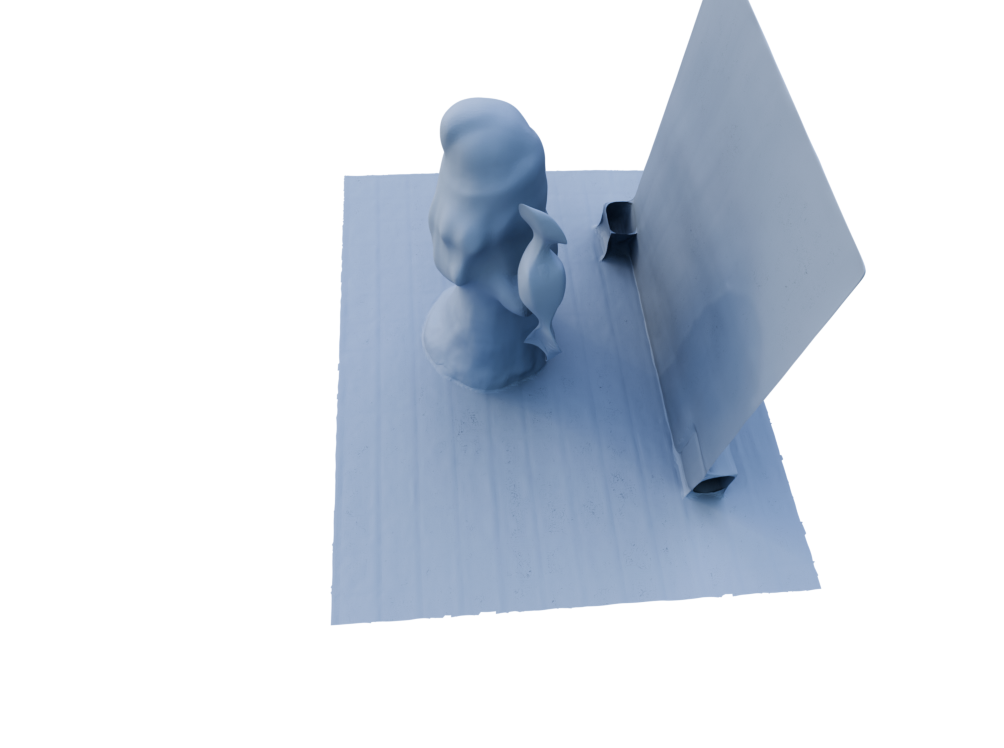

Visualization

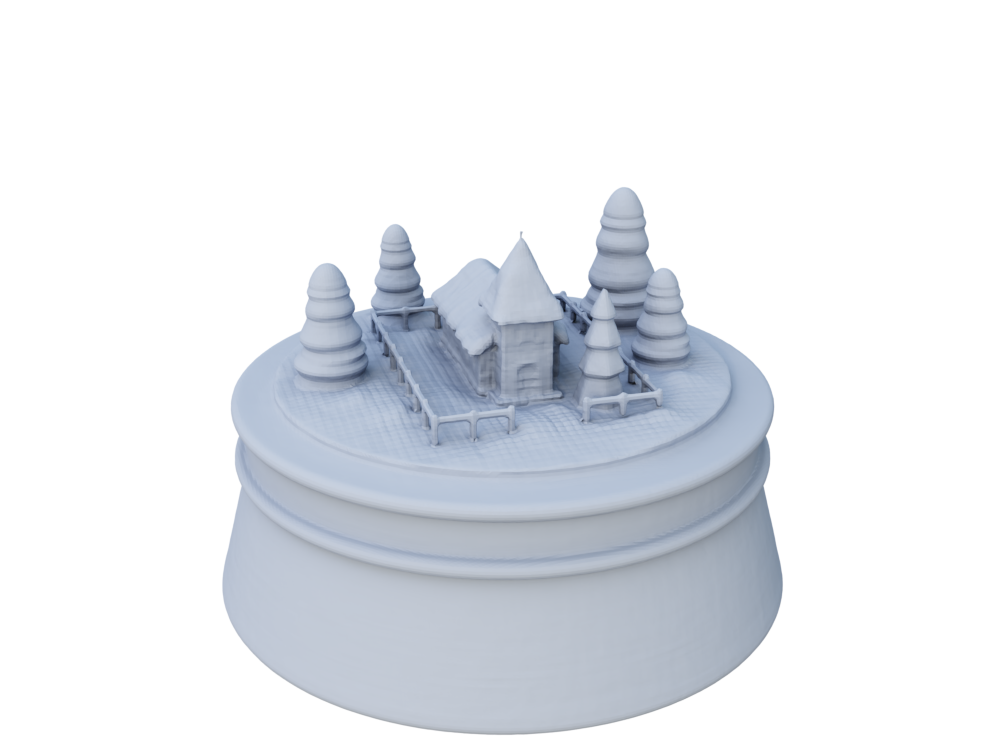

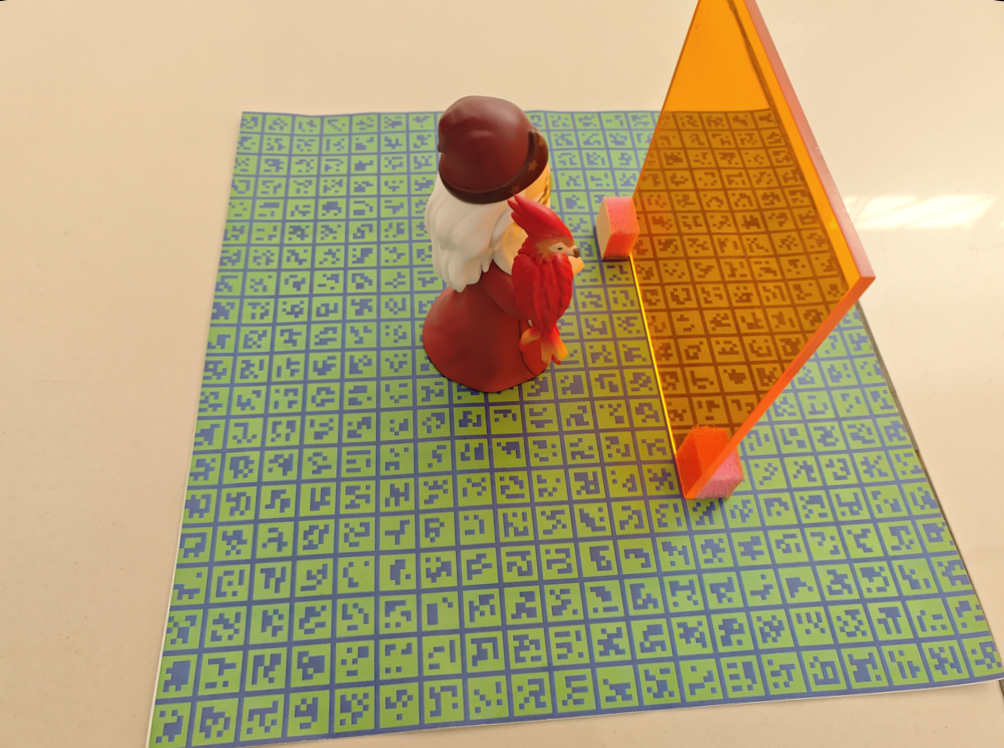

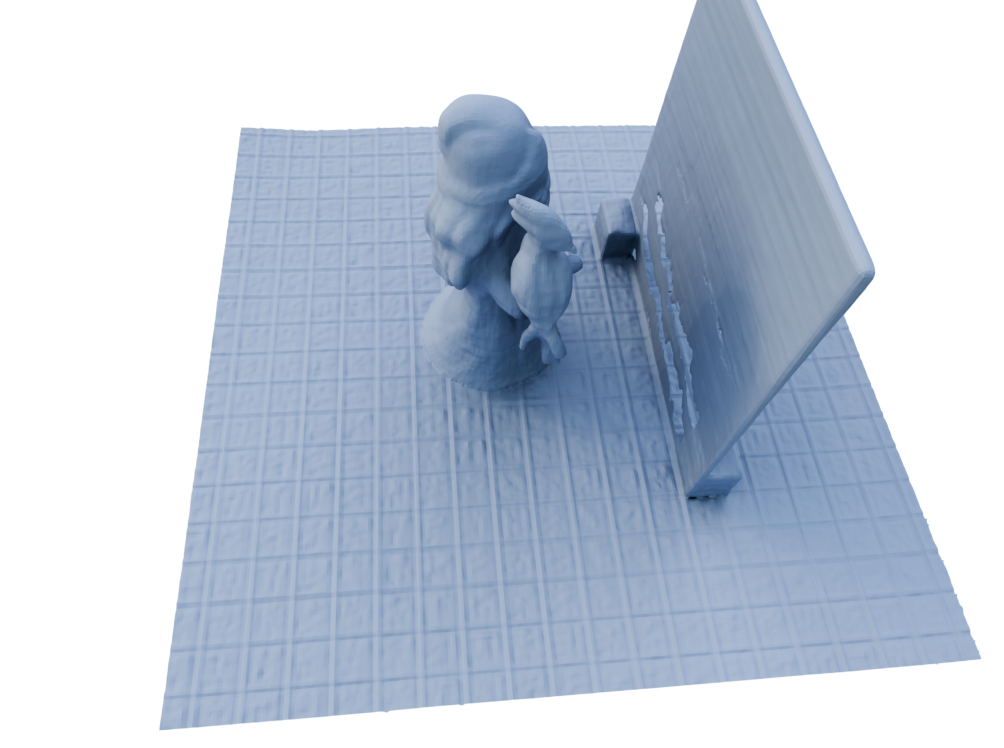

Traditional techniques for reconstructing 3D shapes from multi-view images, such as structure from motion and multi-view stereo, struggle with transparent objects. Recent neural radiance fields and their variants primarily address either opaque or transparent objects, and have difficulty reconstructing both at once. This paper introduces α-NeuS, an extension of NeuS, and proves that NeuS is unbiased for materials ranging from fully transparent to fully opaque. We find that transparent and opaque surfaces align with the non-negative local minima and the zero iso-surface, respectively, of the distance field learned by NeuS. Traditional iso-surface extraction algorithms such as marching cubes rely on fixed iso-values and are therefore ill-suited for such data. We develop a DCUDF-based method that extracts transparent and opaque surfaces simultaneously. To validate our approach, we construct a benchmark that includes both real-world and synthetic scenes, demonstrating its practical utility and effectiveness.
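To make the distinction between the two kinds of surface concrete, here is a minimal 1D sketch in Python. It is not the DCUDF-based extraction used in the paper. It only illustrates, on a made-up distance field sampled along a single ray, how a zero crossing (opaque surface) differs from a non-negative local minimum (transparent surface). The field `toy_distance` and the helper `find_surface_candidates` are hypothetical names introduced for this illustration.

```python
from typing import List, Tuple


def toy_distance(x: float) -> float:
    """Hypothetical 1D distance field along a ray (made up for illustration)."""
    transparent = abs(x - 1.0) + 0.2  # dips to 0.2 at x = 1 but never reaches zero
    opaque = 3.0 - x                  # signed distance that crosses zero at x = 3
    return min(transparent, opaque)


def find_surface_candidates(
    xs: List[float], ds: List[float]
) -> Tuple[List[float], List[Tuple[float, float]]]:
    """Return (zero crossings, non-negative local minima) of the sampled field."""
    crossings: List[float] = []
    minima: List[Tuple[float, float]] = []

    # Opaque candidates: the field goes from positive to non-positive.
    for i in range(len(xs) - 1):
        if ds[i] > 0.0 and ds[i + 1] <= 0.0:
            t = ds[i] / (ds[i] - ds[i + 1])  # linear interpolation weight
            crossings.append(xs[i] + t * (xs[i + 1] - xs[i]))

    # Transparent candidates: interior samples that are strict local minima
    # with a non-negative value.
    for i in range(1, len(xs) - 1):
        if ds[i] >= 0.0 and ds[i] < ds[i - 1] and ds[i] < ds[i + 1]:
            minima.append((xs[i], ds[i]))

    return crossings, minima


# Sample the ray on [0, 4] with a step of 0.25.
xs = [i * 0.25 for i in range(17)]
ds = [toy_distance(x) for x in xs]
crossings, minima = find_surface_candidates(xs, ds)
print("zero crossings:", crossings)
print("non-negative local minima (x, value):", minima)
```

In this toy field, an extractor that looks only for the iso-value 0 would report the crossing near x = 3 and miss the dip near x = 1, whose minimum value is positive. No single fixed iso-value captures both, which is the situation the abstract describes for marching cubes.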

@inproceedings{zhang2024from,

title = {{From Transparent to Opaque: Rethinking Neural Implicit Surfaces with $\alpha$-NeuS}},

author = {Zhang, Haoran and Deng, Junkai and Chen, Xuhui and Hou, Fei and Wang, Wencheng and Qin, Hong and Qian, Chen and He, Ying},

booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},

publisher = {Curran Associates, Inc.},

year = {2024},

}

Several excellent works were introduced concurrently with ours and address similar challenges:

αSurf tackles the same problem of transparent surface reconstruction. It uses a Plenoxel-based approach, which is very fast.

NU-NeRF also focuses on transparent surface reconstruction and additionally supports reflection and refraction.

We encourage readers to check out these works as well.